What is Open Source AI Gateway?

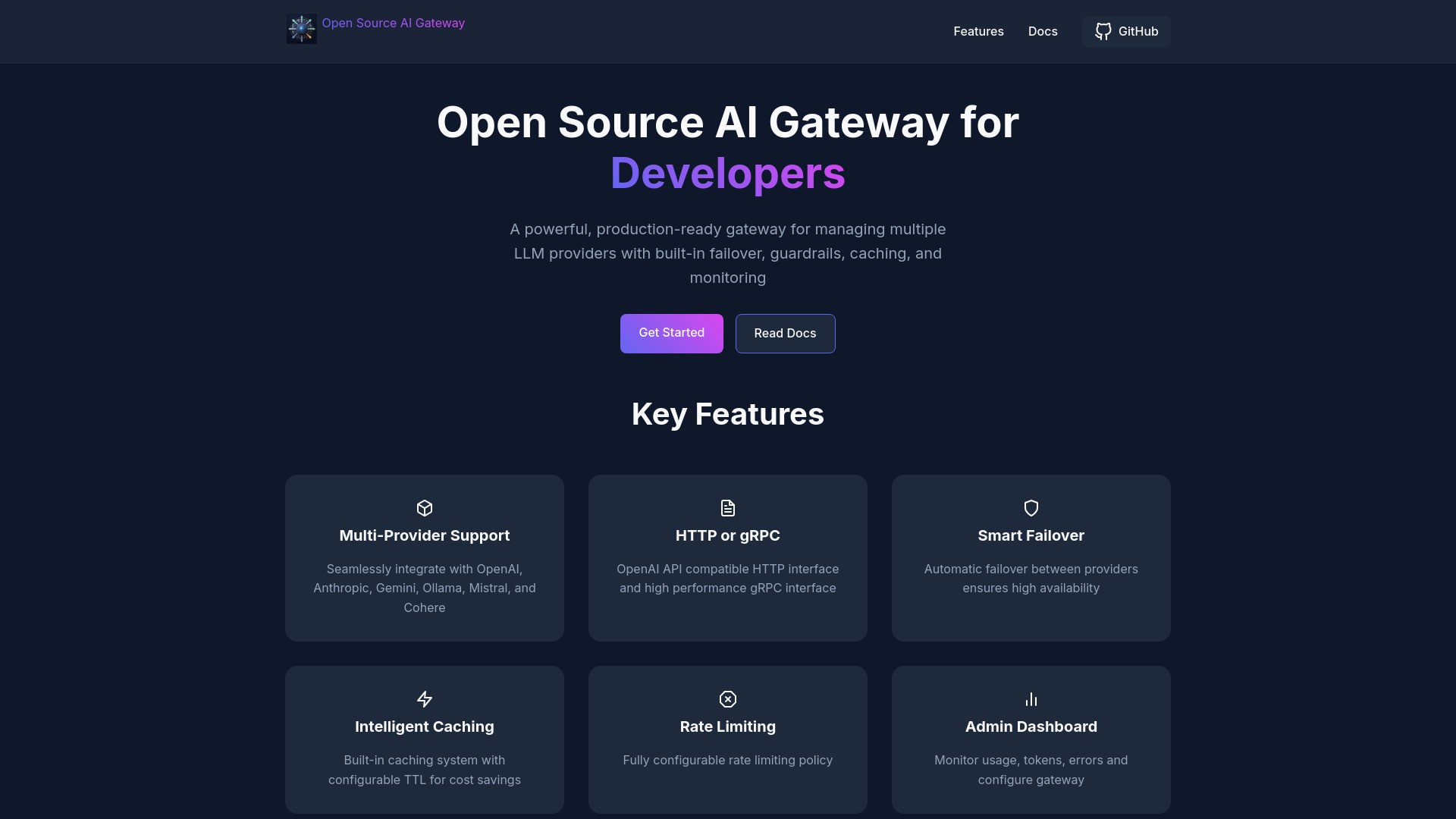

Open Source AI Gateway lets you integrate and manage multiple LLM providers, such as OpenAI, Anthropic, and Cohere, through a single configurable API. On top of the provider APIs it adds built-in analytics, content guardrails, caching, and administrative controls.

How to use Open Source AI Gateway?

To use Open Source AI Gateway, configure your API settings in a Config.toml file, run the gateway container with Docker, and begin making API requests through HTTP or gRPC.
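Once the container is running, a client talks to the gateway instead of calling each provider directly. The sketch below builds an HTTP request with only the Python standard library. It is not taken from the project: the host and port (`http://localhost:8080`), the OpenAI-style route `/v1/chat/completions`, the `x-llm-provider` header, and the model name are all hypothetical placeholders, so check the project README and your `Config.toml` for the real values.

```python
import json
import urllib.request

# Hypothetical values: replace with the host, port, and route your gateway actually exposes.
GATEWAY_URL = "http://localhost:8080"
CHAT_PATH = "/v1/chat/completions"  # assumed OpenAI-style route, not confirmed by the project


def build_chat_request(prompt: str, provider: str = "openai") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request addressed to the gateway."""
    body = {
        "model": "gpt-4o-mini",  # hypothetical model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=GATEWAY_URL + CHAT_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-llm-provider": provider,  # hypothetical provider-selection header
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("Say hello in one word.")
    print(req.get_method(), req.full_url)  # POST http://localhost:8080/v1/chat/completions
    # print(send(req))  # uncomment once the gateway container is running
```

Separating request construction from sending keeps the example inspectable without a live gateway; a gRPC client would need the project's own service definitions instead.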

Open Source AI Gateway's Core Features

Multi-Provider Support

HTTP and gRPC Interfaces

Smart Failover

Intelligent Caching

Rate Limiting

Admin Dashboard

Content Guardrails

Enterprise Logging

Open Source AI Gateway's Use Cases

1. Manage API requests to multiple LLM providers through a single interface.

2. Automatically switch between LLM providers for high availability.
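Automatic switching between providers is commonly done as an ordered fallback chain: try the preferred provider, and on failure move to the next one. The following is a generic sketch of that pattern, not the gateway's own failover logic; the provider names and the `complete_with_failover` and `AllProvidersFailed` names are hypothetical, and each provider is modeled as a plain Python callable rather than a real API client.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical model: a provider is (name, call), where call(prompt) returns text or raises.
Provider = Tuple[str, Callable[[str], str]]


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


def complete_with_failover(prompt: str, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try each provider in priority order; return (provider_name, reply) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:
            # A production version might fail over only on retryable errors (timeouts, 429, 5xx).
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


if __name__ == "__main__":
    def flaky_primary(prompt: str) -> str:
        raise TimeoutError("upstream timed out")

    def healthy_backup(prompt: str) -> str:
        return f"echo: {prompt}"

    chain = [("primary", flaky_primary), ("backup", healthy_backup)]
    print(complete_with_failover("ping", chain))  # ('backup', 'echo: ping')
```

Returning the provider name alongside the reply makes it easy to log which upstream actually served each request, which matters when analytics are reported per provider.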

Open Source AI Gateway FAQ

What LLM providers are supported by Open Source AI Gateway?

Is Open Source AI Gateway easy to configure?

How does the intelligent caching feature work?

Open Source AI Gateway Company

Company name: Open Source AI Gateway

Open Source AI Gateway GitHub

GitHub link: https://github.com/wx-yz/ai-gateway